Internal tools vs production AI: where to draw the line

AI that helps your team work is different from AI that touches customers. The distinction matters more than most organizations realize.

We keep hearing the same story: teams move fast with AI internally, assume they can do the same when AI touches customers, then act surprised when something breaks publicly.

The issue is not that people do not understand AI risk. It is that they are not drawing a clear line between two very different uses.

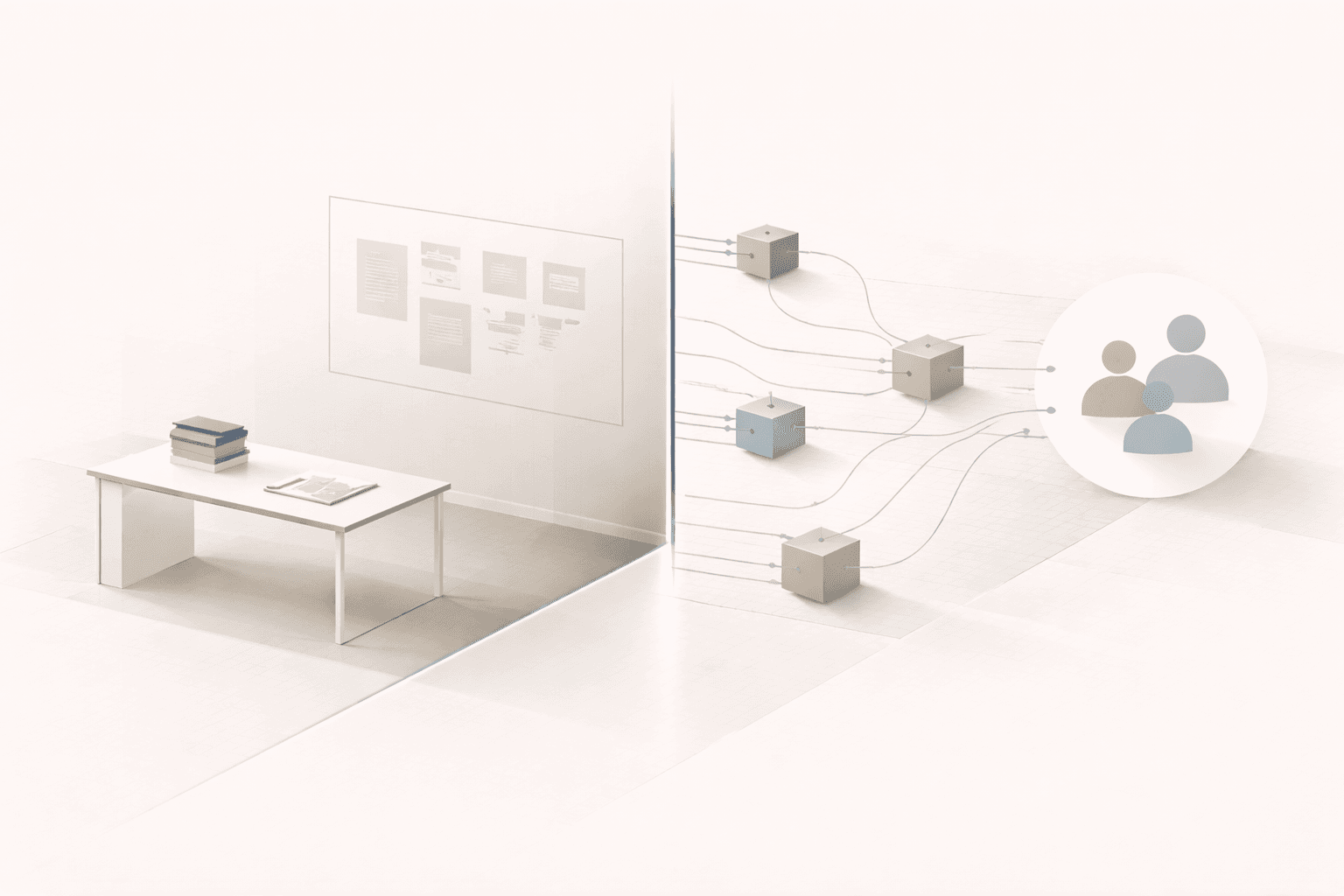

The distinction

Internal AI means AI that helps your team work. Code generation, draft writing, data summarization, research acceleration. A human sees the output before it matters.

Production AI means AI that touches customers or runs without human review. Recommendations, automated responses, decision systems, anything in a pipeline that executes without someone checking first.

The difference is not technical. It is about who catches the mistakes, and when.

Internal AI: speed is the point

When a developer uses Claude to write a function, they read the code before it ships. When someone uses ChatGPT to draft a customer email, they edit it before sending. The AI accelerates the work. The human stays accountable.

This setup is forgiving. You can experiment with models, iterate on prompts, and tolerate imperfection. If the output is wrong, you fix it. No one outside your team ever knows.

We encourage clients to move fast here. Try things. See what saves time and what does not. The cost of a bad output is a few minutes of rework.

What actually goes wrong:

The failure mode is not catastrophic. It is gradual. People stop reviewing carefully. "The AI is usually right" becomes "I did not check." Quality erodes slowly, and by the time anyone notices, the AI has been writing the first draft of everything for six months.

One client discovered their support team was copy-pasting AI responses without editing. The responses were mostly fine until one was not, and a customer screenshot ended up on social media.

The fix is not to slow down. It is to keep humans actually reviewing, not just clicking approve.
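One rough signal that review has turned into clicking approve is how often AI drafts go out unchanged. Here is a minimal sketch. It assumes you log each AI draft next to the message that was actually sent. The `Reply` record, the per-agent grouping, and the 0.9 threshold are hypothetical choices for illustration, not a standard metric.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Reply:
    # Hypothetical log record: who sent it, the AI draft, and the final text.
    agent: str
    ai_draft: str
    sent_text: str


def unedited_rate_by_agent(replies: list[Reply]) -> dict[str, float]:
    """Fraction of each agent's replies sent exactly as the AI drafted them."""
    totals: dict[str, int] = defaultdict(int)
    unedited: dict[str, int] = defaultdict(int)
    for r in replies:
        totals[r.agent] += 1
        # Leading/trailing whitespace differences do not count as an edit.
        if r.ai_draft.strip() == r.sent_text.strip():
            unedited[r.agent] += 1
    return {agent: unedited[agent] / totals[agent] for agent in totals}


def flag_rubber_stamping(replies: list[Reply], threshold: float = 0.9) -> list[str]:
    """Agents whose unedited rate meets or exceeds the (assumed) threshold."""
    rates = unedited_rate_by_agent(replies)
    return sorted(agent for agent, rate in rates.items() if rate >= threshold)


log = [
    Reply("ana", "Thanks for reaching out!", "Thanks for reaching out!"),
    Reply("ana", "Your refund is processed.", "Your refund is processed."),
    Reply("ben", "Your order shipped.", "Your order shipped today, tracking below."),
    Reply("ben", "Sorry for the delay.", "Sorry for the delay."),
]
print(flag_rubber_stamping(log))  # ['ana']
```

A high rate is a reason to look closer, not proof of carelessness. A good draft may need no edits at all.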

Production AI: different game entirely

When AI runs in production, there is no human in the loop. A recommendation engine serves thousands of customers before anyone reviews what it is doing. An automated classification system makes decisions at scale. Errors compound before you know they exist.

This demands a different posture. You need monitoring that catches drift. Fallbacks when confidence is low. Clear boundaries on what the AI can and cannot do. Someone accountable for its behavior, not just its deployment.
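A fallback on low confidence and a clear boundary can both start as a simple routing rule placed in front of the model's output. Here is a minimal sketch. It assumes the model returns a label with a confidence score between 0 and 1. The `Prediction` type, the 0.8 threshold, and the allowed-label set are hypothetical, and you would need to tune them against your own data.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    # Hypothetical model output: a label plus a confidence score in [0, 1].
    label: str
    confidence: float


# Assumed boundary: the only labels the system may act on by itself.
ALLOWED_LABELS = {"billing", "shipping", "returns"}
CONFIDENCE_THRESHOLD = 0.8  # assumed value; tune on held-out data


def route(pred: Prediction) -> str:
    """Return 'auto' to act on the prediction, or 'human_review' to fall back."""
    if pred.label not in ALLOWED_LABELS:
        # Outside the boundary: never act automatically, whatever the confidence.
        return "human_review"
    if pred.confidence < CONFIDENCE_THRESHOLD:
        # Low confidence: send to a person instead of executing.
        return "human_review"
    return "auto"


print(route(Prediction("shipping", 0.95)))  # auto
print(route(Prediction("shipping", 0.55)))  # human_review
print(route(Prediction("legal", 0.99)))     # human_review
```

The boundary check runs first on purpose. A confident answer to a question the system should not be answering is still out of bounds. Model confidence scores are also often poorly calibrated, so treat the threshold as a starting point rather than a guarantee.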

What actually goes wrong:

We have seen this play out with tagging, categorization, pricing, you name it. The AI works in testing. In production, edge cases accumulate, bad outputs flow downstream, and by the time anyone notices, the cleanup is painful.

The system was not the failure. The absence of monitoring was.
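Monitoring for this kind of failure can be useful without being elaborate. Here is a minimal sketch. It assumes the system emits categorical outputs (tags, categories, price bands) and that you kept a baseline of outputs from testing. It compares the label mix of recent outputs against that baseline using total variation distance and raises an alert past a threshold. The 0.1 threshold and the function names are hypothetical.

```python
from collections import Counter


def distribution(labels: list[str]) -> dict[str, float]:
    """Share of each label in a list of outputs (empty input gives {})."""
    counts = Counter(labels)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {label: n / total for label, n in counts.items()}


def total_variation(p: dict[str, float], q: dict[str, float]) -> float:
    """Half the sum of absolute differences: 0 = identical, 1 = disjoint."""
    keys = set(p) | set(q)
    return 0.5 * sum(abs(p.get(k, 0.0) - q.get(k, 0.0)) for k in keys)


def drift_alert(baseline: list[str], recent: list[str], threshold: float = 0.1) -> bool:
    """True if the recent label mix has moved past the (assumed) threshold."""
    return total_variation(distribution(baseline), distribution(recent)) > threshold


baseline = ["shoes"] * 50 + ["bags"] * 30 + ["other"] * 20
recent = ["shoes"] * 30 + ["bags"] * 20 + ["other"] * 50
print(drift_alert(baseline, recent))  # True
```

A stable label mix does not prove the labels are correct. A check like this complements human spot checks rather than replacing them.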

Where the line gets blurry

This is where most organizations get confused. Some systems look like internal tools but behave like production AI:

AI-assisted customer responses. If agents review before sending, it is internal tooling. If they click send without reading, it is production AI wearing a human mask.

Automated reports for leadership. If no one fact-checks the AI-generated summaries, executives are making decisions based on production AI output. They just do not know it.

Code generation in CI/CD. If AI-generated code merges without human review, you have put production AI in your deployment pipeline.

Data enrichment workflows. If AI is tagging, classifying, or transforming data that feeds other systems, errors propagate silently.

The question is always: who catches the mistakes, and how fast?

If the answer is "a customer" or "no one," you are in production AI territory whether you meant to be or not.

Drawing the line in practice

We work with clients to make this distinction explicit. For every AI system, we ask:

- Who reviews the output before it matters? If the answer is "no one," treat it as production AI, even if it feels like a simple automation.
- What is the blast radius if it is wrong? Internal embarrassment is recoverable. Customer-facing errors, compliance issues, or bad data in your systems are not.
- Can you roll back? If AI output is creating records, training other models, or feeding downstream systems, undo gets complicated fast.
- Who owns this? Not who deployed it. Who is accountable for what it does next week?
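The four questions above can also be recorded for each system, so the answers do not live only in someone's head. Here is a minimal sketch. It assumes a simple inventory record. The field names and the `BlastRadius` levels are hypothetical. The rule that "no reviewer" alone makes a system production AI mirrors the first question.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class BlastRadius(Enum):
    INTERNAL = "internal"           # embarrassment, rework
    DOWNSTREAM_DATA = "downstream"  # bad data in other systems
    CUSTOMER = "customer"           # customer-facing or compliance impact


@dataclass
class AISystem:
    # Hypothetical inventory record, one per AI system.
    name: str
    reviewer: Optional[str]    # who reviews output before it matters; None = no one
    blast_radius: BlastRadius
    reversible: bool           # can you roll back what it produced?
    owner: Optional[str]       # accountable for behavior, not just deployment


def classify(system: AISystem) -> tuple[str, list[str]]:
    """Return ('internal' or 'production', list of governance gaps)."""
    production = system.reviewer is None
    gaps: list[str] = []
    if system.owner is None:
        gaps.append("no accountable owner")
    if production and system.blast_radius is not BlastRadius.INTERNAL:
        gaps.append(f"unreviewed output with {system.blast_radius.value} blast radius")
    if production and not system.reversible:
        gaps.append("no rollback path")
    return ("production" if production else "internal"), gaps


overnight_script = AISystem(
    name="overnight tagging script",
    reviewer=None,
    blast_radius=BlastRadius.DOWNSTREAM_DATA,
    reversible=False,
    owner=None,
)
print(classify(overnight_script))
```

The "just a script that runs overnight" case comes back as production AI with three gaps. A drafting tool whose output a named person reviews comes back as internal.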

Most organizations have more production AI than they think. It is disguised as "automation" or "workflow optimization" or "just a script that runs overnight."

The operator's job is to find those systems and make sure they are governed appropriately before something breaks publicly.

Techabo helps organizations build AI systems with clear boundaries and appropriate controls.